Opened at 2012-02-14T11:16:21Z

Closed at 2012-04-17T21:41:36Z

#1676 closed defect (fixed)

ERROR: AssertionError(), apparently on mutable publish during check/repair (current git)

| Reported by: | killyourtv | Owned by: | killyourtv |

|---|---|---|---|

| Priority: | critical | Milestone: | 1.9.2 |

| Component: | code-mutable | Version: | 1.9.1 |

| Keywords: | i2p mutable repair error assertion regression | Cc: | killyourtv@… |

| Launchpad Bug: |

Description (last modified by killyourtv)

Running a deep-check on one of my shares on the I2P grid yielded the following:

'debian/dists/squeeze/main/binary-i386/Release': healthy 'debian/dists/squeeze/main/binary-powerpc': healthy 'debian/dists/squeeze/main/binary-powerpc/Packages': healthy 'debian/dists/squeeze/main/binary-powerpc/Packages.bz2': not healthy repair successful 'debian/dists/squeeze/main/binary-powerpc/Packages.gz': healthy 'debian/dists/squeeze/main/binary-powerpc/Release': healthy 'debian/dists/squeeze/main/source': healthy 'debian/dists/squeeze/main/source/Release': healthy 'debian/dists/squeeze/main/source/Sources.gz': not healthy repair failed 'debian/pool': healthy 'debian/pool/dists': healthy 'debian/pool/dists/squeeze': healthy 'debian/pool/dists/squeeze/main': healthy 'debian/pool/dists/squeeze/main/binary-amd64': healthy ERROR: AssertionError() "[Failure instance: Traceback: <type 'exceptions.AssertionError'>: " $INSTALL_DIR/src/allmydata/mutable/filenode.py:563:upload $INSTALL_DIR/src/allmydata/mutable/filenode.py:661:_do_serialized /usr/lib/python2.7/dist-packages/twisted/internet/defer.py:298:addCallback /usr/lib/python2.7/dist-packages/twisted/internet/defer.py:287:addCallbacks --- <exception caught here> --- /usr/lib/python2.7/dist-packages/twisted/internet/defer.py:545:_runCallbacks $INSTALL_DIR/src/allmydata/mutable/filenode.py:661:<lambda> $INSTALL_DIR/src/allmydata/mutable/filenode.py:689:_upload $INSTALL_DIR/src/allmydata/mutable/publish.py:404:publish

In twistd.log, (I don't know if it's related, I was AFK), I see

2012-02-14 08:53:30+0000 [-] Unhandled error in Deferred: 2012-02-14 08:53:30+0000 [-] Unhandled Error Traceback (most recent call last): File "/usr/lib/python2.7/dist-packages/twisted/internet/base.py", line 800, in runUntilCurrent call.func(*call.args, **call.kw) File "/usr/lib/pymodules/python2.7/foolscap/eventual.py", line 26, in _turn cb(*args, **kwargs) File "/usr/lib/python2.7/dist-packages/twisted/internet/defer.py", line 362, in callback self._startRunCallbacks(result) File "/usr/lib/python2.7/dist-packages/twisted/internet/defer.py", line 458, in _startRunCallbacks self._runCallbacks() --- <exception caught here> --- File "/usr/lib/python2.7/dist-packages/twisted/internet/defer.py", line 545, in _runCallbacks current.result = callback(current.result, *args, **kw) File "/usr/lib/python2.7/dist-packages/nevow/appserver.py", line 181, in _cbFinishRender self.finishRequest( True ) File "/usr/lib/python2.7/dist-packages/nevow/appserver.py", line 176, in finishRequest server.Request.finish(self) File "/usr/lib/python2.7/dist-packages/twisted/web/http.py", line 866, in finish "Request.finish called on a request after its connection was lost; " exceptions.RuntimeError: Request.finish called on a request after its connection was lost; use Request.notifyFinish to keep track of this.

Attachments (1)

Change History (15)

Changed at 2012-02-14T11:18:49Z by killyourtv

comment:1 Changed at 2012-02-14T11:40:41Z by killyourtv

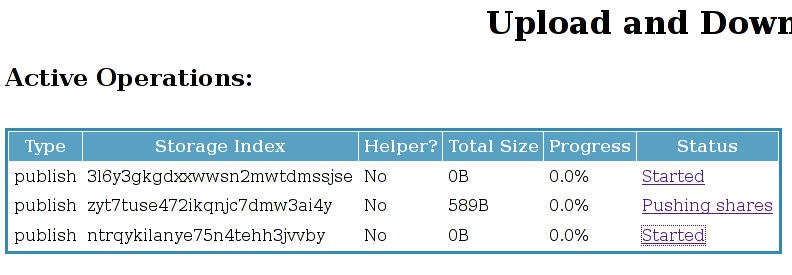

As mentioned in previous tickets, tasks remain in the Active Operations section long after failures occur, such as

The top two jobs failed around 12 hours earlier. The bottom one is the AssertionError? that I've reported in this ticket.

comment:2 Changed at 2012-02-14T11:41:28Z by killyourtv

- Description modified (diff)

comment:3 Changed at 2012-02-14T20:32:54Z by killyourtv

Update: For what it's worth, with the current git revision the deep-check consistently fails at the same point.

$ tahoe deep-check -v --repair --add-lease tahoe:debian/pool/dists/squeeze '<root>': healthy 'main': healthy 'main/binary-amd64': healthy ERROR: AssertionError() "[Failure instance: Traceback: <type 'exceptions.AssertionError'>: " $INSTALL_DIR/src/allmydata/mutable/filenode.py:563:upload $INSTALL_DIR/src/allmydata/mutable/filenode.py:661:_do_serialized /usr/lib/python2.7/dist-packages/twisted/internet/defer.py:298:addCallback /usr/lib/python2.7/dist-packages/twisted/internet/defer.py:287:addCallbacks --- <exception caught here> --- /usr/lib/python2.7/dist-packages/twisted/internet/defer.py:545:_runCallbacks $INSTALL_DIR/src/allmydata/mutable/filenode.py:661:<lambda> $INSTALL_DIR/src/allmydata/mutable/filenode.py:689:_upload $INSTALL_DIR/src/allmydata/mutable/publish.py:404:publish

Reverting back to 1.8.3:

$ tahoe deep-check -v --repair --add-lease tahoe:debian/dists/squeeze '<root>': healthy 'Contents-amd64': healthy 'Contents-amd64.bz2': healthy 'Contents-amd64.gz': healthy 'Contents-armel': healthy 'Contents-armel.bz2': healthy 'Contents-armel.gz': healthy 'Contents-i386': healthy 'Contents-i386.bz2': healthy 'Contents-i386.gz': healthy 'Contents-powerpc': healthy 'Contents-powerpc.bz2': healthy 'Contents-powerpc.gz': healthy 'InRelease': healthy 'Release': healthy 'Release.gpg': healthy 'main': healthy 'main/binary-amd64': healthy 'main/binary-amd64/Packages': healthy 'main/binary-amd64/Packages.bz2': healthy 'main/binary-amd64/Packages.gz': healthy 'main/binary-amd64/Release': healthy 'main/binary-armel': healthy 'main/binary-armel/Packages': healthy 'main/binary-armel/Packages.bz2': healthy 'main/binary-armel/Packages.gz': healthy 'main/binary-armel/Release': healthy 'main/binary-i386': healthy 'main/binary-i386/Packages': healthy 'main/binary-i386/Packages.bz2': healthy 'main/binary-i386/Packages.gz': healthy 'main/binary-i386/Release': healthy 'main/binary-powerpc': healthy 'main/binary-powerpc/Packages': not healthy repair successful 'main/binary-powerpc/Packages.bz2': healthy 'main/binary-powerpc/Packages.gz': healthy 'main/binary-powerpc/Release': healthy 'main/source': healthy 'main/source/Release': healthy 'main/source/Sources.gz': healthy done: 40 objects checked pre-repair: 39 healthy, 1 unhealthy 1 repairs attempted, 1 successful, 0 failed post-repair: 40 healthy, 0 unhealthy

comment:4 Changed at 2012-02-14T20:35:44Z by davidsarah

- Keywords mutable repair error assertion added

- Milestone changed from undecided to 1.10.0

- Summary changed from ERROR: AssertionError() (current git) to ERROR: AssertionError(), apparently on mutable publish during check/repair (current git)

comment:5 Changed at 2012-02-17T17:08:07Z by zooko

- Priority changed from major to critical

comment:6 Changed at 2012-02-18T20:23:54Z by kevan

- Owner changed from nobody to kevan

- Status changed from new to assigned

comment:7 Changed at 2012-02-19T03:19:11Z by kevan

If it's convenient, could you attach an incident report corresponding to this bug? I think I know what's causing this -- the Retrieve object doesn't keep track of whether it has successfully fetched, validated, and stored the private key when asked to do so -- but having logs would help to confirm that.

comment:8 Changed at 2012-03-05T22:35:49Z by zooko

- Keywords regression added

- Milestone changed from 1.10.0 to 1.9.2

This is a regression in 1.8→1.9, isn't it? I propose we put it into the "1.9.2" Milestone.

comment:9 follow-up: ↓ 10 Changed at 2012-04-07T20:48:44Z by warner

hey, is this maybe a dupe of #1689?

comment:10 in reply to: ↑ 9 Changed at 2012-04-08T04:04:10Z by davidsarah

- Component changed from unknown to code-mutable

- Owner changed from kevan to killyourtv

- Status changed from assigned to new

comment:11 Changed at 2012-04-14T18:52:47Z by killyourtv

Sorry for the delayed response.

Trying with trunk and the patchset from #68 applied (assuming I refactored it properly) yielded the following

'debian/dists/lenny/main/binary-i386/Packages.gz': healthy

'debian/dists/lenny/main/binary-i386/Release': healthy

'debian/dists/lenny/main/source': not healthy

repair successful

'debian/dists/lenny/main/source/Release': healthy

'debian/dists/lenny/main/source/Sources.gz': healthy

"ERROR: AttributeError('NoneType' object has no attribute 'callRemote')"

"[Failure instance: Traceback: <type 'exceptions.AttributeError'>: 'NoneType' object has no attribute 'callRemote'"

/usr/lib/python2.7/dist-packages/twisted/internet/defer.py:545:_runCallbacks

/usr/lib/python2.7/dist-packages/twisted/internet/defer.py:796:_cbDeferred

/usr/lib/python2.7/dist-packages/twisted/internet/defer.py:362:callback

/usr/lib/python2.7/dist-packages/twisted/internet/defer.py:458:_startRunCallbacks

--- <exception caught here> ---

/usr/lib/python2.7/dist-packages/twisted/internet/defer.py:545:_runCallbacks

$SRC/src/allmydata/mutable/servermap.py:1178:_check_for_done

$SRC/src/allmydata/mutable/servermap.py:1206:_send_more_queries

$SRC/src/allmydata/mutable/servermap.py:580:_do_query

$SRC/src/allmydata/mutable/servermap.py:601:_do_read

I'm currently trying again with just #1007 and #1010 applied.

comment:12 Changed at 2012-04-15T01:13:20Z by killyourtv

I re-refactored the patch from #68 (I think I made a mistake when I did it the first time earlier tonight).

The repair process on my rather large share has been going for a bit over an hour now. It's certainly going farther than it has at any other point with 1.9.x.

I'll post another update once it completes but so far it's looking very good!

comment:13 Changed at 2012-04-15T20:03:45Z by killyourtv

Several hours later

done: 664 objects checked pre-repair: 587 healthy, 77 unhealthy 77 repairs attempted, 77 successful, 0 failed post-repair: 664 healthy, 0 unhealthy

So far, so good. I'll stay on trunk to test a bit more.

comment:14 Changed at 2012-04-17T21:41:36Z by killyourtv

- Cc killyourtv@… added

- Resolution set to fixed

- Status changed from new to closed

I think this problem has been resolved. I'll close this ticket. Thanks, all!

active operations